Local Inference of Language Models on Apple Silicon

Artificial intelligence is advancing rapidly, especially with the rise of large language models (LLMs) that can generate human-like text, assist with coding, and more.

Cloud-based LLMs, offered through APIs, provide significant benefits – they allow access to powerful models without the need for expensive local hardware and ensure models stay updated and maintained by experts. However, relying on the cloud also introduces challenges, particularly around data privacy, network dependency, and potential compliance issues when sensitive information is transmitted over the Internet. As awareness of these risks grows, there is an increasing demand for local execution of AI models on personal laptops, smartphones, and edge devices.

Running models locally reduces reliance on external servers and ensures private data remains on the user’s device, offering a more secure and privacy-preserving environment. In this evolving landscape, combining the strengths of both cloud and local execution is becoming key to building responsible and trustworthy AI systems.

MLX is a machine learning framework for Apple Silicon, developed by Apple’s machine learning research team. It offers familiar APIs across Python, C++, C, and Swift, closely following the styles of NumPy and PyTorch, with higher-level modules for easier model development. Because Apple Silicon has unified memory, MLX arrays live in memory shared by the CPU and GPU, so operations can run on either device without copying data between them.

Building on this, the MLX-LM library brings large language model (LLM) inference and fine-tuning to Apple devices. MLX-LM can download pre-converted HuggingFace models and run them locally on Mac hardware. It supports a wide range of models, from small instruction-tuned LLMs to larger general-purpose models, with optional quantisation for faster performance and reduced memory usage. With MLX-LM, developers can easily experiment, deploy, and interact with powerful AI models directly on their Macs.

Advantages and Challenges of Local Model Inference

On-device inference greatly enhances data privacy because the input text never leaves the user’s machine. This means MLX-LM can be used with personal or confidential prompts without sending them to a third-party service.

Running models locally offers several key advantages:

- Offline Access and Reduced Latency: Inference happens entirely on the device, eliminating network round-trips and server queues. Response time depends only on the local hardware, and the model keeps working without an internet connection.

- Cost Savings: Using open-source models locally avoids recurring API fees and expensive cloud hosting costs. This can significantly lower expenses, especially during development, testing, or high-volume production deployments.

- Customisation and Control: Local models can be fine-tuned, quantised, or modified within the terms of their licences. Developers aren’t tied to a specific service’s model versions, upgrade cycles, or usage policies, which gives them the freedom to adapt models to their specific needs.

- Technical Exploration: Local access allows researchers and developers to inspect internal model mechanics, test different quantisation levels (e.g., 8-bit, 4-bit, or even 3-bit), and optimise inference performance, which is valuable both for research and for fine-grained control over a deployment.

- Privacy and Data Security: Running models on-device ensures sensitive data stays local, reducing the risk of data leakage or compliance violations associated with sending information to remote servers.

However, local inference also has some limitations:

- Hardware Requirements: Running LLMs requires capable hardware (e.g., sufficient RAM and a fast GPU), and the requirements grow with model size. Devices with limited resources may struggle with high-parameter models, even when quantised.

- Model Management: Users are responsible for managing model updates, optimisations, and security patches, which can be complex compared to cloud-managed services.

- Scalability: Local inference best suits personal, embedded, or small-scale applications. Cloud deployments remain more practical for massive-scale workloads or applications needing dynamic model scaling.

Overall, local execution is an excellent fit for private, responsive, and cost-effective AI applications on Mac hardware – but engineers must weigh hardware capabilities and management responsibilities against these advantages.

Models for Local Inference

MLX-LM can load various pre-converted models from the HuggingFace Hub, optimised for Apple Silicon. The MLX Community organisation (mlx-community on the Hub) hosts many popular models, including Meta’s LLaMA 2 and LLaMA 3 families, Mistral, and Qwen models, among others. These models are available in multiple quantisation formats (4-bit, 8-bit, and others) to fit different memory and performance needs.

Quantisation significantly reduces a model’s memory footprint, making local execution practical. For example, LLaMA 2 7B at half precision (FP16, 2 bytes per weight) needs around 14 GB for its weights, while its 4-bit version fits into roughly 3–4 GB and retains strong performance. Similarly, LLaMA 3.2-1B-Instruct is a compact model of about 1.2 billion parameters that, once quantised to 4 bits, is small enough for fast, lightweight inference on laptops. Quantisation does not remove parameters; it stores each weight in fewer bits. The roughly 193 million "parameters" reported for the 4-bit checkpoint count packed storage elements (several 4-bit weights share one 32-bit word), not the model’s actual parameters. Larger models like LLaMA 3.1-8B-Instruct and Mistral-7B are also available in 4-bit formats, making them accessible on devices with 16–32 GB of RAM.
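The arithmetic behind these figures is simple: weight memory is roughly parameters × bits per weight ÷ 8. The sketch below is a back-of-the-envelope estimate, not an MLX-LM function. It assumes a group-wise scheme in which every group of 64 weights also stores a 16-bit scale and a 16-bit bias (the group size and this overhead model are assumptions for illustration), and it ignores the KV cache, activations, and any layers left unquantised, so real peak memory will be higher.

```python
from typing import Optional


def estimate_weight_memory_gb(num_params: float, bits: int, group_size: Optional[int] = None) -> float:
    """Rough size of the model weights alone, in gigabytes (1 GB = 1e9 bytes).

    Assumption: when group_size is given, each group of group_size weights also
    stores a 16-bit scale and a 16-bit bias, adding 32 / group_size bits per weight.
    KV cache, activations, and unquantised layers are ignored.
    """
    bits_per_weight = float(bits)
    if group_size is not None:
        bits_per_weight += 32 / group_size
    return num_params * bits_per_weight / 8 / 1e9


# A 7B-parameter model at different precisions (group size 64 is an assumption).
for label, bits, group_size in [("FP16", 16, None), ("8-bit", 8, 64), ("4-bit", 4, 64), ("3-bit", 3, 64)]:
    size = estimate_weight_memory_gb(7e9, bits, group_size)
    print(f"7B weights at {label}: ~{size:.2f} GB")
```

For a 7B model this gives 14 GB at FP16 and just under 4 GB at 4-bit, consistent with the figures above; the per-group overhead is why a 4-bit model is slightly larger than a naive parameters ÷ 2 estimate.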

Good choices for local deployment include:

- LLaMA 3.2-1B-Instruct (4-bit): Extremely lightweight, fast generation, low memory usage.

- Mistral-7B-Instruct (4-bit): A strong general-purpose model that fits comfortably on Macs with 16 GB or more of memory.

- Qwen2.5-7B-Instruct (4-bit): Good for multilingual tasks and broader instruction following.

When using MLX-LM, models are downloaded automatically from HuggingFace the first time they are loaded and cached for later use, so no manual setup is needed. For most users, 4-bit quantisation offers the best balance between memory efficiency and output quality, while 3-bit quantisation is an experimental option for very memory-constrained environments, typically at a more noticeable cost in output quality.
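To make the 4-bit versus 3-bit trade-off concrete, here is a pure-Python toy version of group-wise affine quantisation: each group of weights is mapped to small integers, and each value is reconstructed as code × scale + bias. This is similar in spirit to the scale-and-bias scheme MLX uses, but it is not MLX’s implementation; the group size of 64 and the randomly generated weights are assumptions for illustration.

```python
import random
from typing import List, Tuple


def quantize_group(values: List[float], bits: int) -> Tuple[List[int], float, float]:
    """Map one group of floats to unsigned integers in [0, 2**bits - 1].

    Each value is approximated as code * scale + bias, with bias = group minimum.
    """
    levels = 2 ** bits - 1
    lo, hi = min(values), max(values)
    scale = (hi - lo) / levels if hi > lo else 1.0
    codes = [round((v - lo) / scale) for v in values]
    return codes, scale, lo


def dequantize_group(codes: List[int], scale: float, bias: float) -> List[float]:
    """Reconstruct approximate floats from integer codes."""
    return [c * scale + bias for c in codes]


def max_abs_error(values: List[float], bits: int, group_size: int = 64) -> float:
    """Worst-case reconstruction error when quantising values group by group."""
    worst = 0.0
    for start in range(0, len(values), group_size):
        group = values[start:start + group_size]
        codes, scale, bias = quantize_group(group, bits)
        restored = dequantize_group(codes, scale, bias)
        worst = max(worst, max(abs(a - b) for a, b in zip(group, restored)))
    return worst


# Hypothetical weights drawn from a small Gaussian distribution.
rng = random.Random(0)
weights = [rng.gauss(0.0, 0.02) for _ in range(4096)]
for bits in (8, 4, 3):
    print(f"{bits}-bit: max abs error = {max_abs_error(weights, bits):.6f}")
```

Each bit removed roughly halves the number of levels per group (255, 15, then 7), so the reconstruction error grows quickly below 4 bits, which is why 3-bit models tend to lose more quality.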

Code Example: Loading and Running Inference with MLX-LM

Running a language model locally with MLX-LM is straightforward. First, install the MLX-LM package. Once installed, you can easily load a pre-converted model from the HuggingFace Hub and perform inference directly on your Mac.

Install MLX-LM:

```shell
pip install mlx-lm
```

In Python, you start by importing the load and generate functions from the library. The load function takes a model ID from HuggingFace, downloads the MLX-format weights and the tokeniser, and returns both. The files are cached locally after the first download, so later runs load them from disk instead of downloading them again.

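After loading the model and tokeniser, you prepare a prompt. Instruction-tuned models expect their input in a specific chat format, defined by the tokeniser’s chat template. If the tokeniser has one, you wrap the prompt in a list of messages and call its apply_chat_template method with add_generation_prompt=True, which formats the conversation and opens the assistant’s turn. This ensures the prompt follows the structure the model was trained on, which is needed for high-quality outputs.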
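To show what the chat-template step does, the sketch below builds a prompt in a simplified Llama-3-style format: each message is wrapped in role markers, and add_generation_prompt appends an empty assistant header so the model’s continuation becomes the reply. The function name is hypothetical and the format is simplified for illustration; real templates ship with each tokeniser and may add more (for example, a default system message), so use tokenizer.apply_chat_template in practice.

```python
from typing import Dict, List


def format_chat_llama3_style(messages: List[Dict[str, str]], add_generation_prompt: bool = True) -> str:
    """Hypothetical, simplified Llama-3-style chat formatting, for illustration only."""
    parts = ["<|begin_of_text|>"]
    for message in messages:
        # Each turn: a role header, a blank line, the content, then an end-of-turn token.
        parts.append(f"<|start_header_id|>{message['role']}<|end_header_id|>\n\n")
        parts.append(f"{message['content']}<|eot_id|>")
    if add_generation_prompt:
        # Open an empty assistant turn so the model's continuation is the reply.
        parts.append("<|start_header_id|>assistant<|end_header_id|>\n\n")
    return "".join(parts)


messages = [{"role": "user", "content": "Explain the basics of machine learning in simple terms."}]
print(format_chat_llama3_style(messages))
```

Without the trailing assistant header, the model has no signal that it should answer rather than, for instance, continue the user’s message.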

You then call generate, passing the model, tokeniser, prompt, and optional parameters such as max_tokens. The response is returned as a string and is ready to be printed or used within your application.

```python
from mlx_lm import load, generate

# Load a model (downloaded from HuggingFace on first use, then cached)
model_id = "mlx-community/Llama-3.2-1B-Instruct-4bit"
model, tokenizer = load(model_id)

# Prepare a prompt
prompt = "Explain the basics of machine learning in simple terms."

# If the tokenizer has a chat template, wrap the prompt in the model's chat format
if tokenizer.chat_template is not None:
    messages = [{"role": "user", "content": prompt}]
    prompt = tokenizer.apply_chat_template(messages, add_generation_prompt=True)

# Generate a response; verbose=True streams the text and prints statistics
response = generate(model, tokenizer, prompt=prompt, max_tokens=1000, verbose=True)

# The full response is also returned as a string for use in your application
print(response)
```

When calling the generate function, you can set verbose to True to enable detailed logging during inference. The generated text is streamed to the console as it is produced, followed by statistics: the number of prompt tokens and the prompt processing speed, the number of generated tokens and the generation speed, and the peak memory used. This is especially useful for monitoring model performance and for comparing different models and quantisation levels.

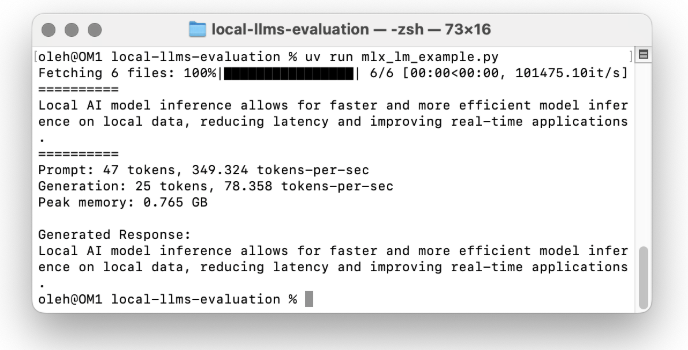

Then, you will see output in the console that gives you insights into the generation process. Example output might look like:

As the terminal output shows, MLX-LM prints the generated response and then reports statistics: the number of prompt tokens (47 here) and the prompt processing speed (349 tokens/sec), the number of generated tokens (25) and the generation speed (78 tokens/sec), and the peak memory used during inference. Here the peak is just 0.765 GB, which shows how memory-efficient a 4-bit quantised model can be on Apple Silicon. This output helps with understanding model performance, monitoring system load, and debugging prompt behaviour, especially when experimenting with different models or tuning generation parameters locally.
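The reported speeds translate directly into wall-clock time, since time = tokens ÷ tokens per second. The minimal sketch below uses the example figures and assumes that each reported speed is an average over its phase. Model loading is not included, and the projection for a full 1000-token reply is hypothetical, since generation speed can change as the context grows.

```python
def phase_seconds(tokens: int, tokens_per_sec: float) -> float:
    """Time spent in a phase, assuming the reported speed is its average rate."""
    return tokens / tokens_per_sec


# Figures taken from the example verbose output.
prompt_s = phase_seconds(47, 349)     # prompt processing
generation_s = phase_seconds(25, 78)  # token generation
ms_per_token = 1000 / 78              # average milliseconds per generated token

# Hypothetical: time for a full max_tokens=1000 reply, assuming 78 tokens/sec holds.
full_reply_s = phase_seconds(1000, 78)

print(f"prompt: {prompt_s:.3f} s, generation: {generation_s:.3f} s")
print(f"~{ms_per_token:.1f} ms per token; 1000 tokens would take ~{full_reply_s:.1f} s")
```

In this example, prompt processing is more than four times faster per token than generation, so for short prompts the total time is dominated by how many tokens the model produces.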

Summary

The ability to run large language models locally on Apple Silicon marks a significant shift in how AI can be deployed, bringing inference closer to the user with performance that, for many tasks, once required cloud infrastructure. What sets MLX-LM apart is not just its ease of use but its close alignment with Apple’s hardware: models run on the GPU through Metal, and MLX benefits from lazy evaluation, optimised Metal kernels, and unified memory shared by the CPU and GPU, which avoids copying data between them. Combined with quantisation, this makes interactive generation speeds possible on a laptop, even with models that would be impractical to run there at full precision.

Another powerful aspect is reproducibility. With local models, developers can freeze weights, version their pipelines, and obtain consistent outputs when decoding is deterministic (greedy decoding or a fixed random seed), unlike cloud APIs that may silently update models. This is particularly valuable in regulated environments like finance, healthcare, or scientific research, where deterministic behaviour matters. Furthermore, by giving full control over tokenisation, sampling, and prompt structure, MLX-LM allows for controlled tuning and fine-grained experimentation.
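The link between sampling and reproducibility can be seen in a toy decoder. The sketch below is a stand-in for a model’s sampling step, not MLX-LM’s sampler: a fixed list of hypothetical logits replaces a real model. Greedy decoding (temperature 0) always picks the highest-scoring token, and temperature sampling repeats exactly when the random generator is seeded the same way.

```python
import math
import random
from typing import List


def sample_token(logits: List[float], temperature: float, rng: random.Random) -> int:
    """Pick a token index: greedy at temperature 0, otherwise softmax sampling."""
    if temperature <= 0:
        return max(range(len(logits)), key=lambda i: logits[i])  # greedy: highest logit
    scaled = [x / temperature for x in logits]
    peak = max(scaled)
    weights = [math.exp(s - peak) for s in scaled]  # unnormalised softmax, numerically stable
    return rng.choices(range(len(logits)), weights=weights, k=1)[0]


def toy_decode(seed: int, temperature: float, steps: int = 10) -> List[int]:
    """Sample a short token sequence from fixed, hypothetical logits."""
    rng = random.Random(seed)
    logits = [2.0, 1.5, 0.5, 0.1]  # stands in for a real model's output
    return [sample_token(logits, temperature, rng) for _ in range(steps)]


run_a = toy_decode(seed=42, temperature=0.8)
run_b = toy_decode(seed=42, temperature=0.8)
print(run_a == run_b)  # same seed and settings give the same sequence
```

With a real model, bit-identical outputs also depend on using the same weights, library versions, and hardware, which local deployment makes it possible to pin down.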

Finally, local deployment opens new possibilities for energy-aware and offline-first applications, which matter for field devices, privacy-focused enterprise tools, and educational environments with limited connectivity. As Apple continues investing in on-device intelligence, frameworks like MLX and MLX-LM pave the way for a new generation of AI-native apps that are fast, private, and entirely self-contained.