AlphaCode 2 - better than 85% of programmers

AlphaCode 2 is an AI system built for solving programming problems. It is based on Gemini Pro, the latest LLM from DeepMind. The system scored better than 85% of the programmers who took part in the same kind of coding competitions. So you could say that AlphaCode 2 is better at coding than me, and possibly than you too.

But before we start singing about replacing programmers with AI, let's look at how AlphaCode 2 works and what it could mean for us.

How does AlphaCode 2 work?

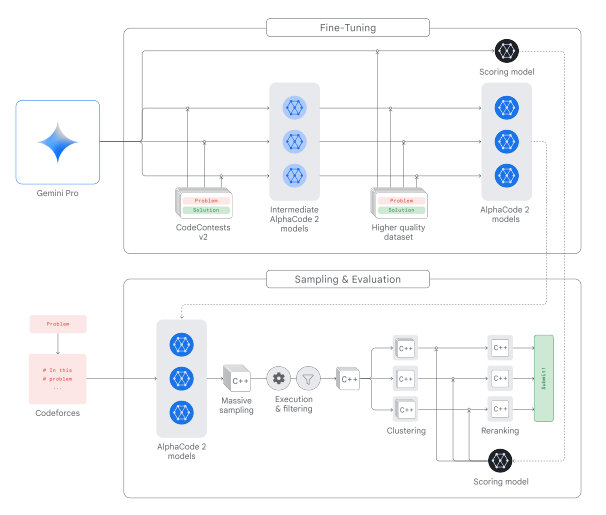

First of all, AlphaCode 2 is an AI system, not an AI model. It is based on modified versions of Gemini Pro (a model with capabilities roughly at the level of GPT-3.5), fine-tuned on 30 million code samples and 15,000 problems from the CodeContests dataset, but the model is only part of the puzzle. The whole system looks something like this:

(source: AlphaCode 2 Tech Report)

How it works can be described fairly simply. Let's break it down into 4 steps:

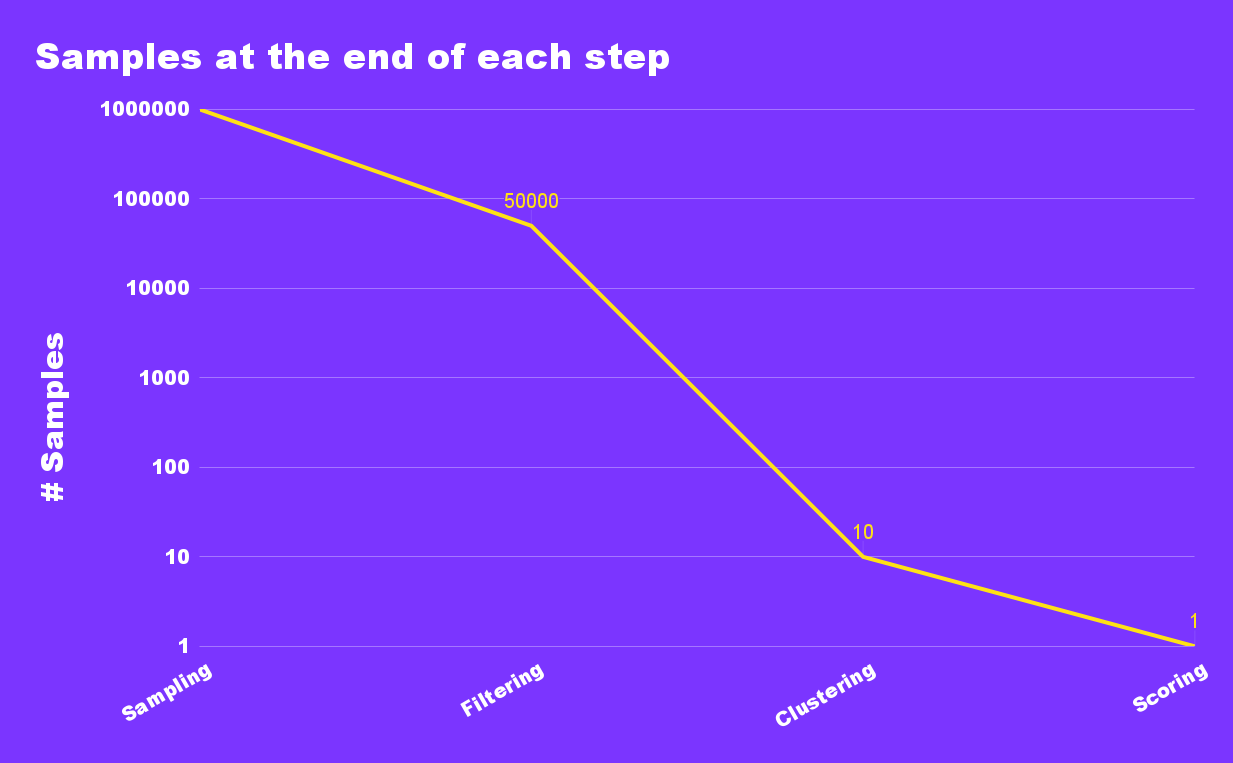

- Sample generation: For each problem, the system generates up to a million diverse code samples, most of which will of course be worthless.

- Filtering: These samples are then filtered by running them and testing their output. This step eliminates about 95% of the samples.

- Clustering: Potentially correct samples are clustered together, combining similar solutions, so that in the end, there are 10 code proposals to choose from.

- Scoring: Then, each proposal is evaluated by a model based on Gemini Pro, and the best proposal is presented as a solution.
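The four steps above can be sketched as a toy pipeline. This is only an illustration of the idea, not DeepMind's implementation: the "model" is a hypothetical fixed pool of small Python functions, clustering groups candidates by their outputs on a few extra inputs (my assumption about what "similar" means here), and the scoring function is a placeholder that simply prefers the biggest cluster instead of asking a Gemini-based model.

```python
import random
from collections import defaultdict

# Toy problem: return the sum of a list of integers.
EXAMPLE_TESTS = [([1, 2, 3], 6), ([5], 5)]   # tests with known answers
EXTRA_INPUTS = [[], [2, 2], [-1, 4, 10]]     # inputs used only for clustering

# Hypothetical stand-in for the model: a fixed pool of candidate "programs".
CANDIDATE_POOL = [
    lambda xs: sum(xs),                   # correct
    lambda xs: sum(x for x in xs),        # correct, written differently
    lambda xs: sum(abs(x) for x in xs),   # wrong, but passes the example tests
    lambda xs: max(xs),                   # wrong
    lambda xs: len(xs),                   # wrong
    lambda xs: xs[0] + xs[-1],            # wrong
]

def generate_samples(n, rng):
    """Step 1: draw n candidates (a real system would sample from an LLM)."""
    return [rng.choice(CANDIDATE_POOL) for _ in range(n)]

def passes_examples(program):
    """Step 2: keep a candidate only if it runs and passes every example test."""
    try:
        return all(program(list(inp)) == out for inp, out in EXAMPLE_TESTS)
    except Exception:
        return False

def behaviour(program):
    """Outputs on the extra inputs; candidates with equal outputs are 'similar'."""
    outputs = []
    for inp in EXTRA_INPUTS:
        try:
            outputs.append(repr(program(list(inp))))
        except Exception:
            outputs.append("error")
    return tuple(outputs)

def cluster(programs, keep=10):
    """Step 3: group by behaviour and keep the `keep` largest groups."""
    groups = defaultdict(list)
    for program in programs:
        groups[behaviour(program)].append(program)
    return sorted(groups.values(), key=len, reverse=True)[:keep]

def score(group):
    """Step 4 placeholder: prefer bigger clusters (not a learned scoring model)."""
    return len(group)

def solve(n_samples=1000, seed=0):
    rng = random.Random(seed)
    samples = generate_samples(n_samples, rng)
    survivors = [p for p in samples if passes_examples(p)]
    clusters = cluster(survivors)
    best = max(clusters, key=score)[0] if clusters else None
    return best, len(samples), len(survivors), len(clusters)

best, n_samples, n_survivors, n_clusters = solve()
print(n_samples, n_survivors, n_clusters, best([-1, 4, 10]))
```

Note that the `abs` candidate passes both example tests and is only separated from the correct solutions at the clustering step, which is why filtering alone is not enough.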

The chart below illustrates the number of code samples provided at the end of each step:

AlphaCode 2 outperforms programmers

The system was evaluated on complex programming problems from Codeforces and managed to solve 43% of them. That is a better result than 85% of the programmers who took part in the same challenges, which puts it at the level of a really decent competitive programmer.

Note that under the hood AlphaCode 2 uses Gemini Pro, so it is not even running on the strongest model Google has. In this case it probably makes sense to trade some accuracy for generation speed, since the system generates up to a million samples per problem.

It is also interesting that DeepMind focused on new problems to avoid the false positives known from GPT-4, which could solve some problems of this type as long as they were published before 2021 (when the original GPT-4's training data ends). For problems GPT-4 had not seen during training, its success rate was reportedly 0%. This suggests that AlphaCode 2 is genuinely good at this and can solve novel problems.

So what? Can we already say that AI will replace programmers?

Before we do that, we need to acknowledge that AlphaCode 2 is not all roses.

Problems with AlphaCode 2

Originally, DeepMind aimed to showcase AlphaCode 2's coding abilities in both Python and C++. The solutions produced in Python were worse than those in C++. This may be because verifying Python code is a bit more involved than verifying C++. Since the Python results were not spectacular, they stuck with the C++ version.

Another thing is that this is still a system where almost all output is wasted. Generating a million diverse code samples requires a lot of computational power. Each of these samples then requires further analysis and processing.
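To get a feel for how much output is thrown away, here is a back-of-the-envelope calculation using only the figures quoted in this article (up to a million samples, 95% removed by filtering, 10 proposals after clustering, one solution presented). The function name and its parameters are mine, and real runs will not hit these round numbers exactly.

```python
def funnel(samples=1_000_000, filtered_out_pct=95, proposals=10, presented=1):
    """Count how many samples survive each step, using the article's figures."""
    after_filtering = samples * (100 - filtered_out_pct) // 100
    wasted_share = 1 - presented / samples
    return {
        "generated": samples,
        "after_filtering": after_filtering,
        "after_clustering": proposals,
        "presented": presented,
        "wasted": f"{wasted_share:.4%}",
    }

for step, value in funnel().items():
    print(f"{step:>16}: {value}")
```

With the defaults, 50,000 samples survive filtering and all but one of the million generated samples end up discarded.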

The overall scheme is very similar to the one in the paper “Let's Verify Step by Step” from OpenAI. Such a technique produces better results, but it has to be said that this is a brute-force approach whose use depends on the resources available. Producing even a relatively short code sample this way can be really expensive. At least for now.

This also means that AlphaCode 2 will probably not be widely available and DeepMind will keep it to themselves.

It should also be remembered that AlphaCode 2 was created to solve small but pretty hard algorithmic problems. In my experience, however, most problems in a programmer's daily work are rather simple, but quite bulky. By that I mean the challenge is fitting a solution into the scope of the entire system, not just coming up with one. Verifying the solution is also much more time-consuming, because it requires more extensive testing.

So at the moment it has little chance of being applied in commercial systems. It is unlikely that a system like AlphaCode 2 could feasibly write an app from scratch.

This does not mean, however, that techniques based on this approach will not become better and more efficient in the future. Maybe even to the point where they become economically viable.

How to use AlphaCode 2?

The short answer is that you can't: AlphaCode 2 is an internal DeepMind project that is not shared with the public. All we have is a technical report. But you can experiment with techniques like majority voting to get better code out of LLMs. Just keep in mind that producing output this way is much more expensive than the standard approach, though the results should be better.
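A minimal sketch of majority voting could look like this. The `ask_model` function is a hypothetical stand-in that replays canned answers; in practice you would replace it with repeated calls to whichever LLM you use. For code, the thing you vote on would more likely be each program's output on shared test inputs than the source text itself.

```python
from collections import Counter
from itertools import cycle

def majority_vote(answers):
    """Return the most common answer and the share of votes it received."""
    if not answers:
        raise ValueError("need at least one answer")
    winner, votes = Counter(answers).most_common(1)[0]
    return winner, votes / len(answers)

# Hypothetical stand-in for an LLM call: replays a fixed list of answers.
_canned_answers = cycle(["42", "42", "41", "42", "24"])

def ask_model(prompt):
    return next(_canned_answers)

# Ask the same question several times and keep the answer most samples agree on.
answers = [ask_model("What is 6 * 7?") for _ in range(5)]
winner, share = majority_vote(answers)
print(winner, share)
```

Every extra sample is another model call, which is exactly where the additional cost comes from.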

AlphaCode 2 is just the beginning

The system from DeepMind shows that the LLM itself is just one component, a unit that can play an important role in a bigger picture. The results achieved by AlphaCode 2 are exciting, but for now they do not change the playing field, even if the system performs better than most programmers.

As we continue to study LLMs, I expect that we will discover at least a few new techniques that improve the quality of the generated responses. Maybe even some that don't waste 99.9999% of the generated answers. That would be great.