Gemini - GPT contender from Google

Gemini is a new family of multimodal AI models from Google DeepMind. It is widely seen as Google's response to OpenAI's GPT models. According to Google's promotional materials, Gemini Ultra surpasses GPT-4 on nearly every AI benchmark.

This marks a new beginning for Google in the large-model space, especially after the hasty release of Google Bard earlier in the year.

We'll analyze the key features of Gemini, highlighting where it truly excels and where Google's claims might be a bit overstated.

What is Gemini?

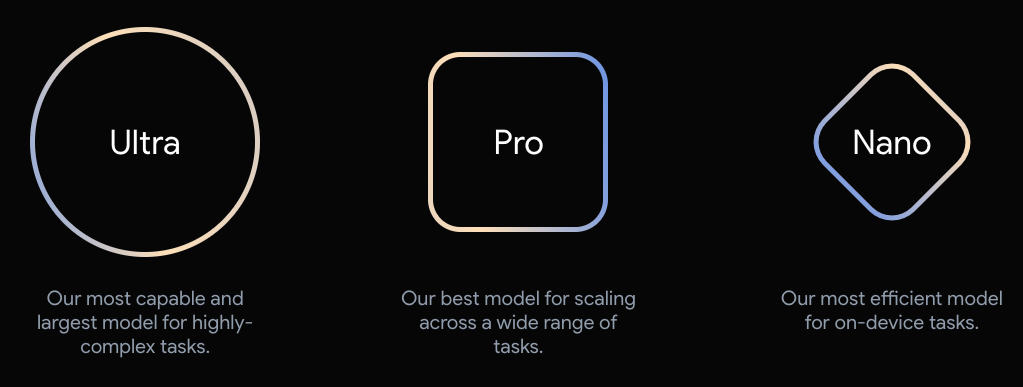

Gemini is a family of models, not just a single model, and comes in three sizes:

- Ultra: The most powerful model, set to compete with GPT-4. Its release has been delayed until next year because training and testing are still ongoing, especially in less widely used languages, where the model's safeguards can still be bypassed easily.

- Pro: Roughly equivalent to GPT-3.5, it will be available on December 13 in Google AI Studio and via the Google Cloud API.

- Nano: A small model suitable for running on end-user devices. It comes in two versions, with 1.8 billion and 3.25 billion parameters. Google plans to use it on devices like Pixel phones for simple tasks like text summarization.

A key feature of the Gemini models is their built-in multimodality, meaning they can seamlessly switch between text, images, video, audio, and code.

What Can Gemini Do?

Google focuses mainly on its best model, Gemini Ultra. DeepMind boasts that Gemini Ultra is the first model to reach 90% on the MMLU benchmark, which tests a wide range of problems. That compares with 89.8% for human experts and 86.4% (or 87.29% as tested by DeepMind) for GPT-4. However, there are some doubts about the accuracy of the MMLU benchmark itself, which makes it a questionable choice of headline result.
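To put those numbers side by side, here is a minimal sketch that computes Gemini Ultra's lead in percentage points. It uses only the scores quoted above; the function name `margins` and the dictionary labels are chosen here for illustration.

```python
# MMLU scores as quoted in the text above, in percent.
GEMINI_ULTRA = 90.0
BASELINES = {
    "human experts": 89.8,
    "GPT-4 (reported)": 86.4,
    "GPT-4 (as tested by DeepMind)": 87.29,
}


def margins(score, baselines):
    """Return the lead of `score` over each baseline, in percentage points."""
    return {name: round(score - value, 2) for name, value in baselines.items()}


if __name__ == "__main__":
    for name, lead in margins(GEMINI_ULTRA, BASELINES).items():
        print(f"Gemini Ultra vs {name}: +{lead:.2f} points")
```

The lead over human experts is only 0.2 points, and the lead over GPT-4 shrinks from 3.6 to 2.71 points when DeepMind's own GPT-4 measurement is used.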

While MMLU is a textual benchmark and Gemini Ultra's text capabilities are similar to GPT-4's, Gemini Ultra performs better with other input types. It achieves significantly better results in AI benchmarks related to video, speech, and even translation. This advantage stems from the models being designed for multimodality from the start.

This design makes tasks like generating code from images easier. Reasoning about images and sound also comes much more easily to Gemini:

EDIT: As it turned out, the video above does not accurately represent how Gemini works. During such demonstrations, Google actually prompted the model with a combination of images and text. What the interaction really looked like is well presented on Google's developer blog, available at this link.

In terms of code, DeepMind also released a related model, AlphaCode 2, which is interesting enough to deserve its own article: AlphaCode 2 is better than 85% of programmers.

The context available for these models is 32,000 tokens.
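As a rough illustration of what a 32,000-token limit means in practice, the sketch below estimates whether a piece of text would fit. The 4-characters-per-token ratio is an assumption (a common rule of thumb for English text), not Gemini's actual tokenizer, and the function names and the `reserved_for_output` parameter are hypothetical.

```python
CONTEXT_WINDOW_TOKENS = 32_000  # figure quoted in the text above
CHARS_PER_TOKEN = 4  # assumption: rough rule of thumb, not Gemini's real tokenizer


def estimate_tokens(text: str) -> int:
    """Estimate the token count from character length (ceiling division)."""
    return -(-len(text) // CHARS_PER_TOKEN)


def fits_in_context(text: str, reserved_for_output: int = 1_000) -> bool:
    """Check whether the text plus a reserved output budget fits the window."""
    return estimate_tokens(text) + reserved_for_output <= CONTEXT_WINDOW_TOKENS


if __name__ == "__main__":
    sample = "word " * 20_000  # 100,000 characters
    print(estimate_tokens(sample), fits_in_context(sample))
```

For a real application, the count should come from the model's own tokenizer, since the ratio varies by language and content type.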

What's Next for Gemini?

The new models will be available from December 13. Gemini is already powering Google Bard, Google's equivalent to ChatGPT, except in Europe and the UK where it's not available.

The exception to this release date is Gemini Ultra, which will appear early next year.

There's a sense of anticipation, as this is just a preview of what will be published in the coming days and months.

While Gemini might not directly threaten OpenAI's position, it enables Google to counter Microsoft's moves. Google will have a significantly better model to integrate into its products.

Gemini's capabilities in processing images, video, and audio are particularly interesting and likely to lead to the most innovative applications.